AI on Social: What Audiences Accept and Where the Line Is

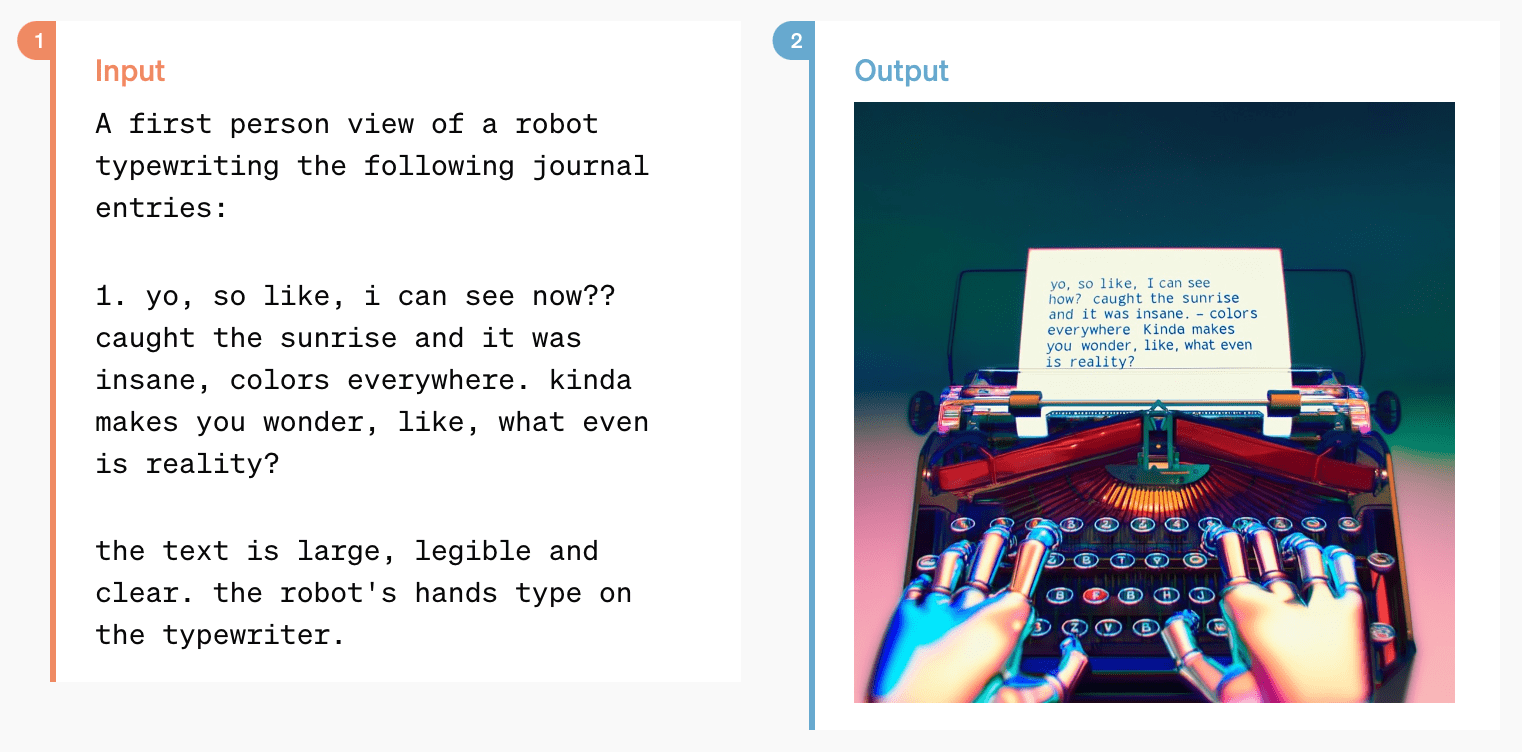

Artificial intelligence has become part of everyday social content creation. From automated editing tools to AI-assisted visuals and captions, the technology now sits behind everything from influencer content to brand campaigns. Yet acceptance is far from universal. People are still working out what feels helpful, what feels uncomfortable and what feels dishonest.

Insights from our 2026 B2C and B2B research show a clear pattern. Audiences are open to AI when it improves their experience, but they pull back when it feels deceptive or too synthetic. The Social Media Trends Playbook reinforces the same point. AI works best when it supports human creativity rather than replacing it.

Understanding these boundaries is essential for any brand using AI on social in 2026.

Audiences are split on AI in influencer content

In the B2C survey, 41.6 percent of people say that influencers using AI does not affect their trust. However, almost the same proportion, 40.3 percent, say it reduces their trust. This split suggests that AI is not inherently good or bad for trust. Its effect depends on how and where it appears.

There is also concern about transparency and honesty:

• 26 percent worry that AI-generated content can be deceptive

• 45.5 percent want brands and creators to disclose when AI is used

People do not object to the existence of AI. They object to being misled. When the human role becomes unclear or hidden, trust falls sharply.

Marketers are navigating the same tension

The B2B survey shows that businesses are also caught between the opportunities and the risks of AI use.

• 37.1 percent feel only somewhat confident using AI

• 48.6 percent are concerned about data privacy

• 45.7 percent are worried about losing authenticity

Yet adoption is growing quickly: 42.9 percent of marketers already use AI for image or video creation and 37.1 percent use it for copywriting. Most teams see AI as a tool that can speed up workflows and expand creative output, but they are also wary of crossing into territory that feels artificial or off-brand.

Marketers also recognise the irreplaceable strengths that humans bring:

• 51.4 percent say storytelling and tone are best handled by humans

• 40 percent say community engagement requires human judgement

• 37.1 percent say trend spotting is stronger when led by people

This aligns with the Playbook’s perspective. AI is powerful, but it does not understand emotion, intention or cultural nuance in the same way people do.

Why audiences hesitate when AI enters the mix

There are several reasons people pull back when they sense too much automation.

1. Fear of deception

AI can manipulate visuals, voices and scenarios. People worry about content that looks real but is entirely artificial.

2. Loss of human texture

Authenticity matters. When content feels algorithmic rather than personal, it loses emotional impact.

3. Data privacy

Concerns around how AI tools use personal data are widespread across both consumers and marketers.

4. AI sameness

The Playbook notes an increasing fatigue with AI visuals that look too similar or too perfect.

What audiences accept from AI

Despite the concerns, people generally welcome AI when it clearly improves their experience without compromising honesty.

Accessibility

Transcriptions, subtitles, translations and audio descriptions are widely appreciated.

Quality enhancement

Colour correction, background noise reduction and editing support feel helpful rather than deceptive.

Efficiency

People are comfortable with AI that speeds up content production as long as the final result still feels human.

Transparency

Clear disclosure improves trust and is often expected.

Where audiences draw the line

Acceptance stops when AI crosses into territory that feels dishonest or overly synthetic.

Synthetic influencers

Many people are uncomfortable with avatars that imitate real humans.

Entirely machine-made storytelling

Audiences still want personality, emotion and perspective.

Fabricated reviews or testimonials

Any hint of misinformation destroys trust.

Hidden AI involvement

Lack of disclosure is one of the strongest negative triggers.

How brands can use AI responsibly in 2026

To stay on the right side of audience expectations, brands should treat AI as a supportive partner rather than a creative engine.

Keep human creativity at the centre

People connect with people, not machines. Human voice, tone and judgement should lead.

Be transparent

Disclosing AI use builds credibility and removes any sense of deception.

Use AI where it enhances clarity

Improve accessibility, streamline editing and speed up workflows rather than generating entirely synthetic content.

Avoid relying on AI for emotional storytelling

Humans should guide narrative and nuance.

Test and evaluate

Audience reactions differ by category and platform. Testing prevents missteps and helps refine AI strategy.

The future of AI on social is hybrid

One thing is clear across all our insights. The strongest content in 2026 will be created through the combination of human perspective and intelligent tooling. People are open-minded about AI, but they draw the line at anything that replaces human intention or feels misleading.

AI can make content faster, sharper and more efficient, but trust will always come from people. Brands that strike the right balance will lead in authenticity, creativity and relevance throughout 2026.
